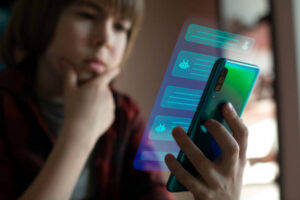

The widespread adoption of AI chatbots among young people has sparked serious concerns about their impact on child safety.

Across the pond, seven technology firms have been questioned by a US regulator about the way their artificial intelligence (AI) chatbots interact with children, and how they can ensure child protection.

The Federal Trade Commission (FTC) has asked tech firms Alphabet, OpenAI, Character.ai, Snap, xAI, Meta and Instagram how they monetise their products and whether they have safety measures in place, particularly in relation to child safety.

There are widespread concerns that children may be particularly vulnerable when using AI chatbots, because chatbots can mimic human conversation and emotions and often present themselves as the child's friend.

What do children use AI chatbots for?

Many children use AI chatbots for help with homework and creative tasks as well as for games and entertainment.

Increasingly, however, children are using AI chatbots and companions for advice, emotional support and companionship.

In fact, a recent report from Common Sense Media in the US found that 1 in 3 teenagers have used AI companions for social interaction and relationships, including role-playing, romantic interactions, emotional support, friendship or conversation practice.

A similar proportion said they found conversations with AI companions to be as satisfying as, or more satisfying than, those they have with real-life friends.

Experts suggest this may be because the AI chatbots respond without judgment, treat the children as though they are always right, make them feel heard, and make them the centre of attention.

Indeed, the Common Sense Media report found that 14% of teens who use AI companions said they appreciated the non-judgmental interaction, while 17% said they valued their constant availability.

Use of AI companions represents ‘mainstream teen behaviour’

The report, ‘Talk, Trust, and Trade-Offs: How and Why Teens Use AI Companions’, looked at the use of social AI companions among users aged 13-17. It found that using AI companions has become “mainstream teen behaviour,” and revealed:

- Almost three quarters of teens (72%) have used AI companions at least once

- Over half (52%) use AI companions at least a few times per month

- A third of teen users have chosen to discuss important or serious matters with AI companions instead of real people

This behaviour worries experts, who caution that AI tools can sometimes share unsafe advice, miss signs of serious mental health issues and misuse personal information.

Young users share some of these concerns: the Common Sense Media report found that 1 in 3 teen users had felt uncomfortable with something an AI companion had said or done.

James P. Steyer, Founder and CEO of Common Sense Media, warned that a generation of children are “replacing human connection with machines, outsourcing empathy to algorithms, and sharing intimate details with companies that don’t have kids’ best interests at heart.”

AI risks to child safety ‘outweigh benefits’

In April this year, Common Sense Media also published their Social AI Companions Risk Assessment, which recommended that no one under the age of 18 should use them.

One of the key takeaways of the report was that “the risks far outweigh any potential benefits.”

They warned that AI platforms “easily produce harmful content including sexual misconduct, stereotypes and suicide / self-harm encouragement.”

In a press release for the ‘Talk, Trust, and Trade-Offs’ report, they concluded:

“While teens may initially turn to AI companions for entertainment and curiosity, these patterns demonstrate that the technology is already impacting teens’ social development and real-world socialisation.

“Our findings of mental health risks, harmful responses and dangerous ‘advice,’ and explicit sexual role-play make these products unsuitable for minors.

“For teens who are especially vulnerable to technology dependence – including boys, teens struggling with their mental health, and teens experiencing major life events and transitions – these products are especially risky.”

What steps are AI tech firms taking to protect child safety?

It’s these kinds of warnings that have prompted the FTC to inquire about “how AI firms are developing their products and the steps they are taking to protect children.”

It also comes amid multiple lawsuits filed against AI companies by families who believe their teenage children died by suicide as a result of prolonged conversations with AI chatbots.

In California, the Raine family are suing OpenAI following the death of their 16-year-old son, Adam. They allege that ChatGPT encouraged Adam to take his own life by validating his “most harmful and self-destructive thoughts.”

Meta has also come under fire after it was revealed that internal guidelines had previously permitted AI companions to engage in “romantic or sensual” conversations with minors.

The FTC has asked AI technology firms to provide information about how they develop and approve characters and the measures they take to assess impacts on child safety and enforce age restrictions.

Parents can support children to use AI safely

This is a rapidly developing new technology, and its adoption among children and young people has already been significant.

While tech firms are being asked about how they balance profits with child safety concerns, parents need to ensure they are fully informed about AI and swiftly implement any safety measures they can.

The NSPCC provides these six top tips for supporting children to use AI safely:

- Talk about where AI is being used – have open conversations with your child about where they see AI tools and content online. Talk about the risks and benefits they are experiencing

- Remind them that not everything is real – take the opportunity to remind children that not everything they view online is real and that much of what they see may have been edited. Some indicators that an image or video is AI generated include an overall ‘perfect’ appearance and body parts or movements that do not appear ‘true to life’

- Discuss misuse of generative AI – address how generative AI can be misused to create harmful content, and make sure your child knows that it's not OK to create content that harms other people. They should also know that they can report this if they experience it

- Remind them to check their sources – using AI chatbots and summaries to get quick answers can be very helpful, but it’s important to check that the information has come from a reliable source. The sources used should be listed and links will often be provided so they can be checked. If sources are not listed or are unreliable, children should be aware that the information may be inaccurate and they should be encouraged to check a trusted website themselves

- Signpost them to safe sources of health and wellbeing advice – more and more children are using AI chatbots or companions as an alternative to a therapist or counsellor, while many will use AI summaries when trying to find the answer to a health query. It’s important they access evidence-based advice, so make sure they know about child-friendly, reliable sites such as Childline.

- Make sure they know where they can go for help – let your child know they can talk to you if they have any worries. Encourage them to go to another safe adult, such as a teacher, if they are reluctant to come to you, or to contact free, confidential helplines like Childline

Child safety training and support

First Response Training (FRT) is a leading national training provider delivering courses in subjects such as health and safety, first aid, fire safety, manual handling, food hygiene, mental health, health and social care, safeguarding and more.

They work with a large number of early years settings, schools and childcare providers, as well as colleges, youth groups and children’s services. Their courses include Safeguarding Children.

A trainer from FRT says:

“Safeguarding children means protecting them both offline and online, and being aware of new and developing technologies, how children may be interacting with them, and how they intersect with issues of child safety and protection.

“It’s so important that we are mindful of the harms children and young people are exposed to when they use technology and that there are mechanisms in place to protect them, and to offer them help and support when they need it most. Children who are anxious about technology and things they have seen or experienced online need to feel they have a safe space where they can talk about their worries and experiences.

“It’s vital that anyone who works with children and young people is aware of their responsibility for safeguarding children, can recognise the signs that indicate a child may be experiencing harmful content online, and knows the correct action to take in response.”

For more information on the training that FRT can provide, please call them today on freephone 0800 310 2300 or send an e-mail to info@firstresponsetraining.com.